Building a Financial Crime Detection Pipeline Using Apache Kafka

Learn how to design and implement a real-time financial crime detection pipeline using Apache Kafka for streaming transaction data. This blog covers core components such as high-throughput ingestion, scalable event processing, anomaly detection logic, and alerting mechanisms tailored for compliance and fraud prevention use cases.


Kiran Yenugudhati

2/9/2025 · 2 min read

This blog walks through the design of a real-time financial crime detection pipeline using Apache Kafka to ingest, stream, and analyze transaction data.

You’ll learn how to:

Ingest high-velocity transactions using Kafka

Process events in real time for anomaly detection

Trigger alerts or downstream actions

Build an architecture that supports scalability, compliance, and auditability

🔍 Why Real-Time FinCrime Detection Matters

Traditional batch-based fraud detection systems are:

Too slow to block fraud in progress

Hard to scale under high transaction volumes

Often disconnected from operational systems

A real-time pipeline using Kafka enables you to:

Monitor live transaction streams

Flag suspicious behavior instantly

Reduce financial loss and improve compliance

Enable proactive fraud and AML (anti-money laundering) response

🧰 Core Components

Ingestion: Apache Kafka (transactions_raw topic)

Processing: Spark Structured Streaming / Flink / Databricks

Detection Logic: Rule-based or ML model (fraud scoring)

Storage: Delta Lake / Snowflake / Databricks

Alerting: Kafka topics / REST APIs / Slack / Streamlit

🛠️ Step-by-Step Architecture

1. Real-Time Ingestion with Apache Kafka

Set up Kafka producers to publish raw transaction events in real time.

The pipeline uses three Kafka topics:

transactions_raw

flagged_transactions

alerts
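As a rough sketch of what a producer publishes to transactions_raw, the snippet below builds a transaction event and serializes it into a key/value pair. The event fields (transaction_id, account_id, card_id, and so on) are a hypothetical schema, and the snippet stops short of the actual send call. Keying by account_id is a common choice because Kafka only guarantees ordering within a partition, and the default partitioner sends records with the same key to the same partition.

```python
import json
import uuid
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass
class TransactionEvent:
    # Hypothetical schema for a raw transaction event.
    account_id: str
    card_id: str
    amount: float
    currency: str
    country: str
    device_id: str
    transaction_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    event_time: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_kafka_record(event: TransactionEvent) -> tuple[bytes, bytes]:
    """Return (key, value) bytes for the transactions_raw topic.

    The key is the account ID so that all events for one account land on
    the same partition and are consumed in order.
    """
    key = event.account_id.encode("utf-8")
    value = json.dumps(asdict(event), sort_keys=True).encode("utf-8")
    return key, value


if __name__ == "__main__":
    evt = TransactionEvent(
        account_id="acct-42", card_id="card-1", amount=250.0,
        currency="AUD", country="AU", device_id="dev-9",
    )
    key, value = to_kafka_record(evt)
    print(key, value)
    # With a real client such as kafka-python, this would become roughly:
    # producer.send("transactions_raw", key=key, value=value)
```

Serializing to JSON keeps the example readable; many teams switch to Avro or Protobuf with a schema registry once the event format stabilizes.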

2. Stream Processing & Transformation

Use a stream processor (e.g., Spark Structured Streaming, Apache Flink, or Databricks Delta Live Tables) to:

Clean and enrich the data

Join with lookup tables (user risk score, device ID, geo history)

Format events for scoring
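The clean, enrich, and format steps can be prototyped in plain Python before porting them to Spark or Flink. In this minimal sketch, in-memory dictionaries stand in for the user risk score, device, and geo history lookup tables; every table name, field name, and the default risk of 0.5 for unknown accounts is a hypothetical assumption. In Spark Structured Streaming the equivalent would typically be a stream-static join against reference tables.

```python
from typing import Optional

# Hypothetical lookup tables; in production these would be reference tables
# (for example Delta tables) joined to the stream.
USER_RISK = {"acct-42": 0.8, "acct-7": 0.1}
KNOWN_DEVICES = {"acct-42": {"dev-9"}, "acct-7": {"dev-1"}}
GEO_HISTORY = {"acct-42": {"AU"}, "acct-7": {"AU", "NZ"}}

REQUIRED = ("transaction_id", "account_id", "amount", "country", "device_id")


def clean(raw: dict) -> Optional[dict]:
    """Drop malformed events and normalize field formats."""
    if any(raw.get(k) in (None, "") for k in REQUIRED):
        return None
    try:
        amount = float(raw["amount"])
    except (TypeError, ValueError):
        return None
    if amount <= 0:
        return None
    return {**raw, "amount": round(amount, 2), "country": raw["country"].upper()}


def enrich(event: dict) -> dict:
    """Join the event with user risk, device, and geo history lookups."""
    acct = event["account_id"]
    return {
        **event,
        # Unknown accounts get a neutral default risk score (an assumption).
        "user_risk": USER_RISK.get(acct, 0.5),
        "known_device": event["device_id"] in KNOWN_DEVICES.get(acct, set()),
        "new_location": event["country"] not in GEO_HISTORY.get(acct, set()),
    }


def prepare_for_scoring(raw: dict) -> Optional[dict]:
    """Clean, enrich, and keep only the fields the scorer needs."""
    event = clean(raw)
    if event is None:
        return None
    e = enrich(event)
    keep = ("transaction_id", "account_id", "amount", "user_risk",
            "known_device", "new_location")
    return {k: e[k] for k in keep}
```

Returning None for malformed events keeps the example simple; a real pipeline would usually route them to a dead-letter topic for inspection instead of silently dropping them.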

🛠️ Streaming pipeline code samples will be added soon.

3. Anomaly Detection Logic

Rule-Based Detection (Simple + Fast)

Multiple failed logins followed by a large transaction within 5 minutes

A transaction from a new location with an unusually high value

A single payment split across multiple cards or accounts
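The three example rules can be sketched as a small in-memory rule engine. This is a single-process illustration that assumes events arrive in event-time order. The thresholds are hypothetical: 3 failed logins, 5,000 as a "large" amount, 3× the account's running average as "unusually high", and 3 distinct cards paying one payee within 10 minutes as a "split" (one possible reading of the third rule). The field names are also assumptions. A production job would keep this state in the stream processor's keyed state and handle late events with watermarks.

```python
from collections import defaultdict, deque
from dataclasses import dataclass

# Illustrative thresholds only; real values come from your fraud/AML team.
LOGIN_WINDOW_S = 5 * 60        # failed logins must precede the txn by <= 5 min
MIN_FAILED_LOGINS = 3
LARGE_AMOUNT = 5_000.0
HIGH_VALUE_MULTIPLIER = 3.0    # "unusually high" = 3x the account's average
SPLIT_WINDOW_S = 10 * 60
SPLIT_MIN_CARDS = 3


@dataclass
class Txn:
    ts: float          # event time in epoch seconds
    account_id: str
    card_id: str
    payee_id: str
    amount: float
    country: str


class RuleEngine:
    """In-memory, per-key state for three example detection rules."""

    def __init__(self):
        self.failed_logins = defaultdict(deque)        # account -> login times
        self.countries = defaultdict(set)              # account -> countries seen
        self.totals = defaultdict(lambda: [0.0, 0])    # account -> [sum, count]
        self.payee_txns = defaultdict(deque)           # payee -> recent txns

    def on_failed_login(self, account_id: str, ts: float) -> None:
        self.failed_logins[account_id].append(ts)

    def on_transaction(self, t: Txn) -> list[str]:
        hits = []

        # Rule 1: multiple failed logins followed by a large txn within 5 min.
        logins = self.failed_logins[t.account_id]
        while logins and t.ts - logins[0] > LOGIN_WINDOW_S:
            logins.popleft()
        if len(logins) >= MIN_FAILED_LOGINS and t.amount >= LARGE_AMOUNT:
            hits.append("FAILED_LOGINS_THEN_LARGE_TXN")

        # Rule 2: a country never seen for this account, with an amount well
        # above the account's running average.
        seen = self.countries[t.account_id]
        total, count = self.totals[t.account_id]
        if count and t.country not in seen:
            if t.amount > HIGH_VALUE_MULTIPLIER * (total / count):
                hits.append("NEW_LOCATION_HIGH_VALUE")
        seen.add(t.country)
        self.totals[t.account_id] = [total + t.amount, count + 1]

        # Rule 3: payments to one payee spread across several distinct cards.
        recent = self.payee_txns[t.payee_id]
        recent.append(t)
        while t.ts - recent[0].ts > SPLIT_WINDOW_S:
            recent.popleft()
        if len({r.card_id for r in recent}) >= SPLIT_MIN_CARDS:
            hits.append("SPLIT_ACROSS_CARDS")

        return hits
```

Returning a list of rule IDs, rather than a single boolean, makes it easy to log exactly which rules fired for each decision.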

ML-Based Detection (Advanced)

Train fraud detection models using historical labeled data

Score transactions in real time and flag any transaction whose score exceeds a threshold

Use features like transaction velocity, geo deviations, network graph signals
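To make the scoring step concrete, the sketch below computes two of the features mentioned, transaction velocity (transactions in the past hour) and geo deviation (great-circle distance from the account's previous transaction), plus an amount-to-average ratio that is an added assumption. It passes them through a logistic function and flags scores above a threshold. The weights, bias, and 0.8 threshold are hypothetical placeholders, not a trained model.

```python
import math
from collections import deque

# Hypothetical coefficients, NOT a trained model; real values would come from
# a model fitted on labeled historical transactions.
WEIGHTS = {"velocity_1h": 0.35, "geo_km": 0.0008, "amount_ratio": 0.6}
BIAS = -4.0
THRESHOLD = 0.8


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometres."""
    r = 6371.0
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def fraud_score(features: dict) -> float:
    """Logistic score in (0, 1) from a weighted sum of features."""
    z = BIAS + sum(WEIGHTS[k] * features[k] for k in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


class FeatureState:
    """Per-account history needed to build features for the next transaction."""

    def __init__(self):
        self.times = deque()   # timestamps (seconds) within the last hour
        self.last_pos = None   # (lat, lon) of the previous transaction
        self.total, self.count = 0.0, 0

    def features(self, ts, lat, lon, amount):
        while self.times and ts - self.times[0] > 3600:
            self.times.popleft()
        geo_km = haversine_km(*self.last_pos, lat, lon) if self.last_pos else 0.0
        avg = self.total / self.count if self.count else amount
        feats = {
            "velocity_1h": len(self.times),
            "geo_km": geo_km,
            "amount_ratio": amount / avg if avg else 1.0,
        }
        # Update state after computing features so they only use past events.
        self.times.append(ts)
        self.last_pos = (lat, lon)
        self.total += amount
        self.count += 1
        return feats


def score_and_flag(state: FeatureState, ts, lat, lon, amount):
    """Return (score, flagged) for one transaction."""
    score = fraud_score(state.features(ts, lat, lon, amount))
    return score, score > THRESHOLD
```

Computing features strictly from past events, as here, mirrors how they must be built at serving time and helps avoid training/serving skew.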

🧠 You can run models in Spark, Databricks, or even Snowflake ML in downstream layers.

4. Alerting and Response

Flagged events are written to a dedicated alert topic (e.g., alerts) and pushed to:

Slack / Teams alerts

Email notifications

Internal dashboards (e.g., Streamlit, Grafana, Tableau, Power BI)

Case management systems
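A consumer of the alert topic typically fans each flagged event out to several destinations. The sketch below assumes a hypothetical alert payload and treats each destination (Slack or Teams, email, dashboard, case management) as a plain callable, so the routing logic can be tested without real integrations. Routing by severity, the 0.95 "high" cut-off, and suppressing duplicate alerts for the same transaction are illustrative design choices, not requirements of the pipeline.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A sink is anything that accepts an alert dict, e.g. a wrapper around a
# Slack webhook or a case-management API client (hypothetical here).
Sink = Callable[[dict], None]


@dataclass
class AlertRouter:
    routes: Dict[str, List[Sink]]               # severity -> destinations
    high_score: float = 0.95                    # assumed cut-off for "high"
    # Transaction IDs already alerted on; unbounded here, a real consumer
    # would expire old entries.
    _seen: set = field(default_factory=set)

    def handle(self, flagged: dict) -> int:
        """Route one flagged event; return the number of sinks notified."""
        txn_id = flagged["transaction_id"]
        if txn_id in self._seen:
            return 0    # duplicate delivery (e.g. a consumer retry): skip
        self._seen.add(txn_id)
        score = flagged.get("score") or 0.0
        alert = {
            "transaction_id": txn_id,
            "account_id": flagged["account_id"],
            "reasons": flagged.get("reasons", []),
            "score": score,
            "severity": "high" if score >= self.high_score else "medium",
        }
        sinks = self.routes.get(alert["severity"], [])
        for sink in sinks:
            sink(alert)
        return len(sinks)
```

For example, `AlertRouter(routes={"high": [slack.append, cases.append], "medium": [cases.append]})` sends high-severity alerts to both lists and medium-severity alerts only to the case queue.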

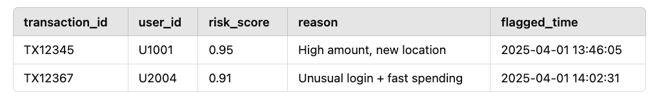

📋 Example Detection Outcome Table

🔐 Compliance, Logging & Governance

Kafka retains the transaction history as an immutable, append-only log (for as long as the topic's retention settings allow)

All decisions (rule or ML-based) are logged with timestamps

Supports compliance audits and forensic traceability

Data lineage tracked from ingestion → decision → alert
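One way to make every decision traceable from ingestion through decision to alert is to write an append-only audit record for each one. The sketch below assumes JSON Lines output and a hypothetical record layout. It uses the Kafka topic, partition, and offset of the source record as the lineage anchor, since that triple uniquely identifies a record in a Kafka cluster, and stores the rule IDs or model version behind each decision.

```python
import json
from datetime import datetime, timezone
from typing import Optional, TextIO


def audit_record(txn_id: str, source: dict, decision: str, reasons: list,
                 model_version: Optional[str], score: Optional[float],
                 alert_id: Optional[str]) -> dict:
    """Build one audit entry tying an input event to its decision and alert."""
    return {
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "transaction_id": txn_id,
        # Topic, partition and offset identify the exact source record.
        "source": {k: source[k] for k in ("topic", "partition", "offset")},
        "decision": decision,          # e.g. "flagged" or "cleared"
        "reasons": reasons,            # rule IDs that fired
        "model_version": model_version,
        "score": score,
        "alert_id": alert_id,          # None when no alert was raised
    }


def append_audit(out: TextIO, record: dict) -> None:
    """Append the record as one JSON line (append-only log)."""
    out.write(json.dumps(record, sort_keys=True) + "\n")
```

Writing `out` as a file opened in append mode, or producing the same records to a dedicated audit topic, keeps the log append-only.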

🎯 Key Benefits

✅ Near real-time detection of suspicious activity

✅ Modular: rules or ML models can evolve independently

✅ Scalable: Kafka spreads topic partitions across brokers, so it can absorb high transaction volumes from banking or e-commerce apps

✅ Auditable: logs and triggers are fully trackable

✅ Integrates easily with fraud teams, dashboards, or case systems

📌 Conclusion

Building a real-time financial crime detection system is no longer reserved for banks with massive engineering teams. With tools like Apache Kafka, stream processors, and modern data platforms, you can:

Detect fraud as it happens

Respond quickly to minimize damage

Stay compliant with AML and reporting regulations

Empower fraud teams with live insights and alerts

📎 Artefacts

Sample Kafka producer/consumer scripts

Detection rules template (SQL + Spark)

Architecture diagram

Streamlit UI for fraud investigation

GitHub starter project

ACTUVATE PTY LTD

Delivering strategic data architecture, cloud engineering, and AI-driven solutions

contact@actuvate.com.au

© 2024. All rights reserved.